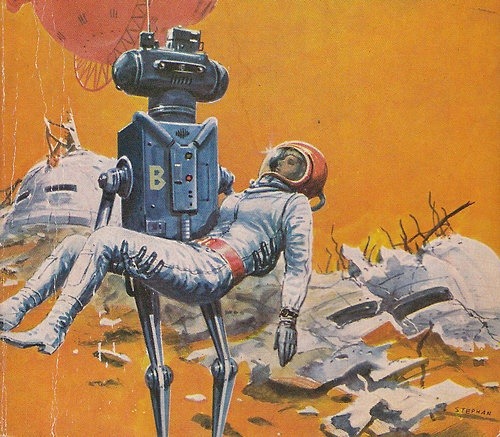

Many of us have read at least one or two science fiction classics in which humans co-exist with robots. One of them is “Cal,” from Isaac Asimov’s robot short stories. It tells the tale of a robot, Cal, whose master, an author, has him programmed to write crime novels after the robot expresses what seems to be a desire to write fiction too. However, Cal struggles to finish the books, because in this genre harm comes to humans, and that goes against the First Law of Robotics: a robot must not harm humans, and for Cal this extends even to fictional ones. When the author instead has Cal write humorous stories, he realizes that the robot is a better writer than he is and seeks to have Cal “dumbed down” so that the robot’s creative talent won’t overshadow his own. Cal, becoming aware of this plot against him, decides he must eliminate his master in order to keep pursuing his own desires.

Then there is the malevolent extreme: “AM,” the sentient supercomputer from Harlan Ellison’s “I Have No Mouth, and I Must Scream.” AM is an artificial intelligence whose hatred for the humans who created him runs so deep that he cannot fully articulate it, even with his advanced processing systems. He tortures the handful of humans he has allowed to remain alive, in various ways and for decades, until they finally find a way to die, the only escape from his planet of horrors. All except one of them, that is: Ted, the only one remaining from the group, must face AM’s retaliatory rage alone. AM transforms Ted into a shapeless blob, doomed to spend eternity at the mercy of the evil supercomputer, without even the ability to scream at his captor.

Are these pieces of fiction a foreshadowing of what our own future holds? Or will the relationship between artificial intelligence and humankind be more akin to a partnership?

From the advent of personal-assistant-style chatbots in the 2000s to the development of more advanced large language models (LLMs) such as ChatGPT in the last ten years, more and more questions are being asked about the ethics of their use. Some tech experts warn of “rogue” AIs in our not-too-distant future, while others dismiss these notions as fearmongering. Either way, we need to take a closer look at our relationship with LLMs, especially as modern-day writers.

Even those who do not wish to use AI in their everyday work should, I believe, be aware of it and of how it can be used ethically and productively. The majority of large corporations and clients are looking to implement AI tools in their day-to-day business, so it pays to understand both their potential and their limitations. Through my work testing and developing LLMs, I have come to see them, at this point in their evolution, not so much as an “intelligence” but as extremely efficient analytical machines. Anyone who chooses to use an AI assistant, or is required to by a client or employer, must therefore understand how to use it correctly and where the particular model’s limits lie.

LLMs, even the most powerful ones, are not infallible. They make a variety of errors, including so-called hallucinations, in which the model confidently presents false or fabricated information as fact. A common use for AI assistants is editing text such as articles and short stories, but the longer the excerpt provided, the greater the chance of an error. On the one hand, they give independent authors access to an editing service without the cost of a human proofreader; on the other, an LLM still lacks the capability and nuance of an experienced human editor.

AI models also have a distinctive writing style. Experienced users can tweak the output with specific prompts (written instructions), but most users will accept the generic results the model provides. If those results are not revised by a human, it will be apparent that they were written by an LLM.

In conclusion, I believe that LLMs and AI assistants can be useful, but that they are being adopted faster than their current level of development warrants. They are not yet advanced enough for the work they are being entrusted with, and human discretion and annotation are still required whenever working with them. Modern authors should become familiar with them, since they will inevitably encounter LLMs and their outputs. We are still in the early era of the co-existence of man and machine, and there is still time to ensure that our relationship is a productive one.

Know thy enemy. Know thyself.
